Kuvastin [ˈkuʋɑ̝s̠tin] - a Finnish word meaning ‘mirror’ or ‘imager’

If you just want to run the code, it is available on GitHub.

Introduction

My dad died recently. Way before his time - and suddenly. I am still coming to terms with it, and the funny thing about grieving is that people do it in different ways.

I like building things and working on projects. It helps me process. It’s one of the things my dad taught me - when clearing his things I found some old PCB patterns on transparency for a microcontroller programmer. He had worked on it with his workmates, and the code and circuit board diagrams were circulated using internal work mail. There was some dodgy German txt file claiming it’s the fastest, simplest programmer out there. Good on you, dad - he actually built it, as I found the finished product. That dodgy circuit board and the passion to learn eventually led him to teach me how to program.

So, this project was both to honour his memory and to do something physical of my own in turn. Since I started writing this, though, OpenAI has begun imploding heavily, so here’s hoping all the code still works when you read it.

Testing the idea

A while ago I saw the extremely cool Project E Ink display. I liked the minimalist approach, but I don’t really read physical newspapers, nor do I want to spend €2,300.

Discussing it with friends, I threw an offhand comment about how it would be cool to use ChatGPT to prompt a diffusion AI to generate wall art. I argued it would be cool to have a painting that changed shape every day based on what was happening. I think everyone thought I was just being a bit mad and possibly drunk, so we moved on.

The next day the idea hadn’t left me, so I looked into it. I’ve done some tinkering on diffusion models, so I figured it would be possible to have ChatGPT or similar create some interesting output based on my calendar. I felt 18th century print styles would work with E Ink displays, as they are low resolution and stylised, and there are plenty of samples out there to ensure a consistent style. To begin with, I tried it with just a prompt directly in ChatGPT.

1 | You: |

It responded with

1 | ChatGPT: |

Holy crap! That seems plausible! So I asked it to generate it and…

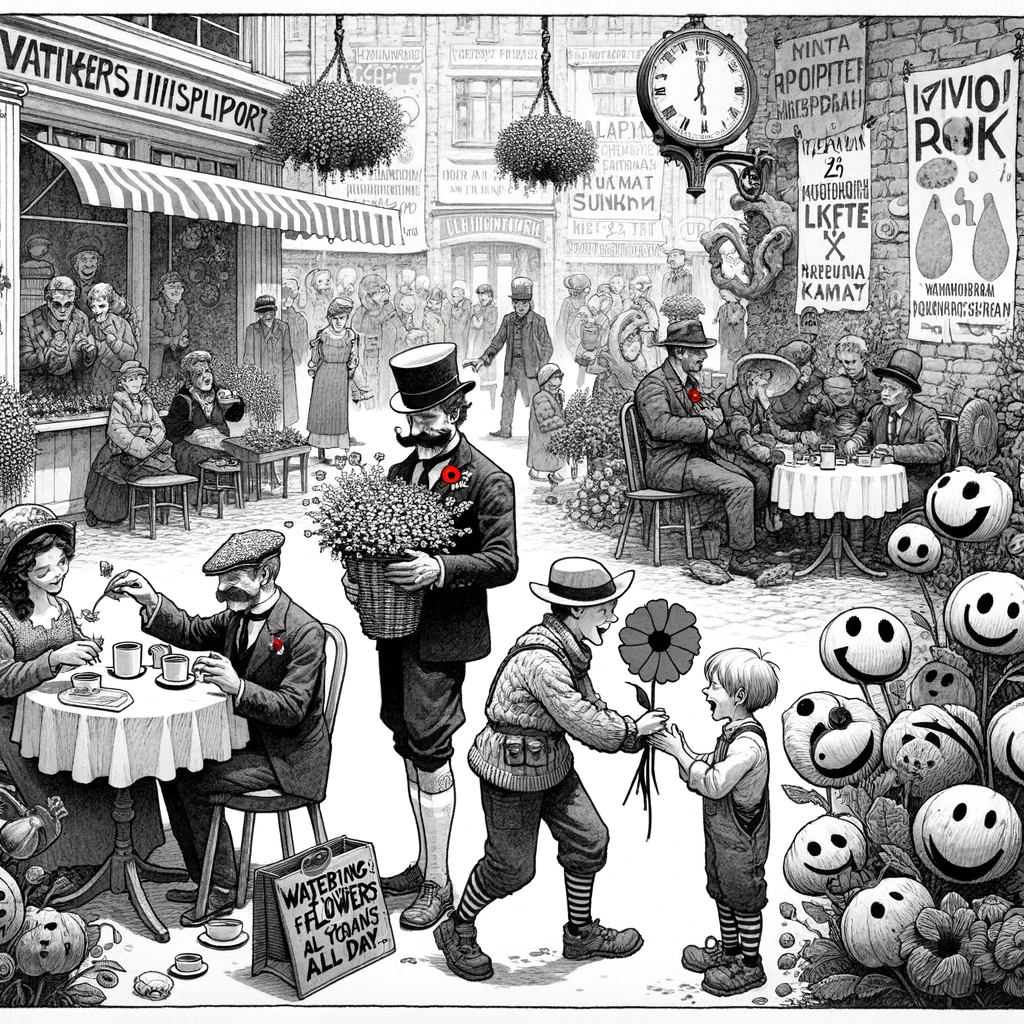

I love the poppies in reference to Remembrance Sunday

Not too shabby! It used about the right style, about the right colours and looks to be set in about the right time. This is perfect for me - I have seen a million electronic calendars that tell me facts about my day. I’m sick of those; I just wanted something that would illustrate and entertain, not directly inform. I figured in this way AI hallucinations would actually work to my advantage - taking artistic liberties with such mundane things as my car’s upcoming MOT.

AI as a service is still annoying

I’m not a fan of DALL-E per se; Midjourney does a much better job at creating images true to the fashion of the era. However, Midjourney does not have an API, nor are they happy for you to fudge one yourself. Stable Diffusion on my local machine was inconsistent, and I really didn’t want to build an API for it or find a way to host it in some Colab monstrosity. I figured for what I wanted DALL-E 3 would do well enough.

Let’s use my real calendar

So the next step was to get an excerpt of my actual calendar. Luckily, I use Google apps so I could use their APIs. After clicking through the quick start, I had the list I needed.
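To give a feel for what that list looks like, here is a minimal sketch of flattening it into plain text for a prompt. It assumes event dicts shaped the way the Calendar quick start returns them (a `summary`, plus a `start` holding either `dateTime` or `date`). The `format_events` helper and the two sample events are made up for illustration, not lifted from my script.

```python
def format_events(events):
    """Turn Calendar API style event dicts into one line of text per event."""
    lines = []
    for event in events:
        start = event["start"]
        # Timed events carry 'dateTime'; all-day events carry only 'date'.
        when = start.get("dateTime", start.get("date"))
        title = event.get("summary", "(no title)")
        lines.append(f"{when} {title}")
    return "\n".join(lines)


# Hypothetical sample data standing in for the real API response.
sample_events = [
    {"summary": "Car MOT", "start": {"dateTime": "2023-11-13T09:00:00Z"}},
    {"summary": "Remembrance Sunday", "start": {"date": "2023-11-12"}},
]

print(format_events(sample_events))
```

Plain text like this is easy to paste under the instructions in a prompt, and the fallback title keeps untitled events from crashing the run.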

So, I took the output and fed it with a similar prompt to ChatGPT. Diffusion models like you to be extremely specific regarding style, composition and any other element. I also wanted to find some whimsical, allegorical or otherwise meaningful details in the picture.

1 | Today is Nov 12. Imagine you are a master prompt maker for dalle. You specialise in creating |

What’s also cool is you can also ask ChatGPT to come up with a title based on the prompt it generates - which I figured would come in handy later.

1 | User |

To further refine the style I wanted to see, I turned back to Midjourney, which has an excellent feature called describe. It produces a description of what an image looks like to the model and keywords to use. I fed it a bunch of pictures of old lithographs and maps and it gave me stuff like this back:

1 | 1️⃣ an engraving of a man holding a chair, in the style of high quality photo, weathercore, |

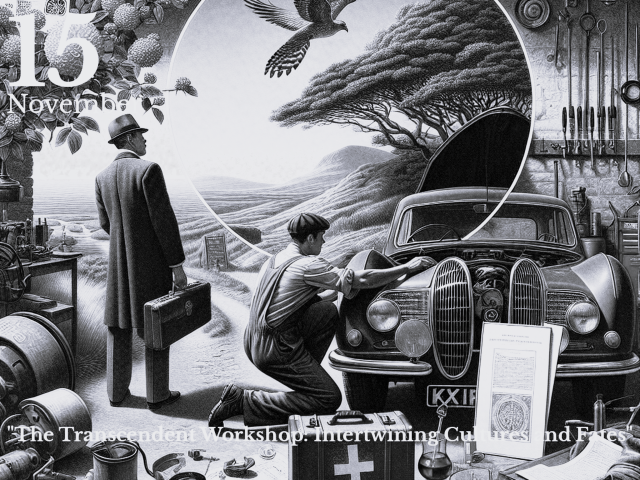

These could then be fed back into the prompt, refining the style of the image. What came out was AWESOME and is just so close to what I was imagining in my head.

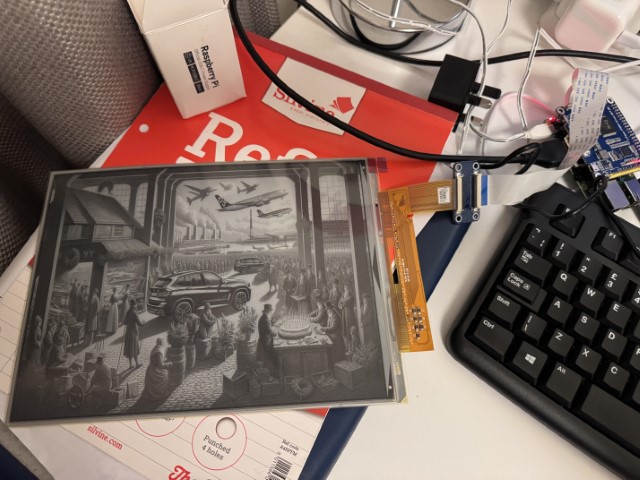

I love that it picked up the car from the MOT confirmation email. The aesthetic and slight grain on the picture will look great on E Ink.

Putting the concept to code

So far, after an hour or so, all of the work had happened just in the ChatGPT UI and on Midjourney’s Discord. It was clear the idea had legs, so it was time to code it all out. OpenAI has a nice Python SDK that helps you shortcut the work. I hope they don’t completely implode, or that MS does something similar! Since I was just prototyping I didn’t bother creating a nice portable set of functions or classes; I just extended the Google Calendar quick start script because that’s the way I roll.

1 | from openai import OpenAI |

Prompting ChatGPT is easy. You can give it a system prompt that describes its role and a user prompt, which is essentially what you would type in the ChatGPT window. What comes back is a response object that contains the response string. I chose to use GPT-4 despite the increased cost per token because I wanted the increased creativity for the artful prompt. I think it’s worth the few cents.
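For the curious, the call looks roughly like the sketch below. It assumes the v1 Python SDK, where `client` is an `OpenAI()` instance. The helper names `build_messages` and `ask_for_image_prompt` and the placeholder prompt strings are mine for illustration, not the exact code from the project.

```python
def build_messages(system_prompt, calendar_text):
    """Pair the role description with the day's calendar text."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": calendar_text},
    ]


def ask_for_image_prompt(client, system_prompt, calendar_text, model="gpt-4"):
    """Send both prompts and return the reply text.

    `client` is assumed to be an openai.OpenAI() instance (v1 SDK).
    """
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(system_prompt, calendar_text),
    )
    # The reply string lives on the first choice of the response object.
    return response.choices[0].message.content


# Placeholder strings, not the real prompts from this post.
messages = build_messages(
    "You write image prompts in the style of old engravings.",
    "2023-11-13T09:00:00Z Car MOT",
)
print(messages[0]["role"], messages[1]["role"])
```

Keeping the message building separate from the network call makes it easy to eyeball exactly what gets sent before spending any tokens.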

Once we have a response, I simply feed the prompt response to another call to generate an image:

1 | # Generate the image |

This call can return either a URL to the image or a base64-encoded image in a JSON response. Initially, I used URL returns, but quickly noticed that it was bothersome to download the image as the request would be declined due to permissions. Also, the image kept getting deleted quite quickly, so I had no other option than to fish in the dark corners of Stack Overflow on how to get things to work.

1 | image = Image.open(BytesIO(b64decode(data))) |
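To spell out the base64 route: as far as I know, passing `response_format="b64_json"` to `client.images.generate` puts the encoded image in `response.data[0].b64_json`. The decoding step itself needs nothing beyond the standard library. A minimal sketch, where `decode_image_bytes` is a hypothetical helper name and the sample payload is just a PNG signature standing in for a real image:

```python
from base64 import b64decode, b64encode


def decode_image_bytes(b64_json):
    """Decode the base64 text from the API back into raw image bytes."""
    return b64decode(b64_json)


# Stand-in payload: the 8-byte PNG signature, encoded the way the API
# would encode a full image. Not real API output.
fake_payload = b64encode(b"\x89PNG\r\n\x1a\n").decode("ascii")

raw = decode_image_bytes(fake_payload)
print(len(raw))
```

Those raw bytes are what gets wrapped in `BytesIO` and handed to `Image.open`, so nothing ever has to be downloaded from a short-lived URL.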

Assembling the hardware

So, it worked! Here I was, realising I had run into a wall without some physical hardware. So, I ordered what I needed to make this a reality. I wanted the image to be displayed on an E Ink display as large as could be delivered relatively quickly. In my case, I ended up next-day delivering all components, which added a bit of extra cost on top. If you have no such issues you can use the components below.

| Item | Price |
|---|---|
| WaveShare 10.3inch E Ink Display HAT | £140 |
| Raspberry Pi 4B 4GB | £35 |
| Power brick | £9 |
| Generic 128GB SD Card | £9.90 |
| Generic A4-sized Picture Frame with inline Frame | £2.50 |
| Total | £196.40 |

Monday - I remember why I hate hardware

The next day, to my great surprise, every component had arrived and was exactly as described. This was awesome. The display especially is the largest you could get without doing some serious haggling on AliExpress. It’s about the size of an A5 sheet of paper but ever-so-slightly taller and narrower. Not the 32” monstrosity I had planned for, but definitely good enough for the mantelpiece.

The first hurdle was that, in my excitement, I failed to realise that RPi 4s and onward only ship with Micro-HDMI ports. I forgot to order the adapter. Oh well. Luckily, I had an old Pi 3 embedded in another project, so I went ahead and cannibalized it.

The real challenge was to get the E Ink display to work. It came with a HAT shield which lit up when plugged into the Pi, so I assumed something worked at least. The docs online were cryptic, but after a few hours of trying and failing with different projects and samples I found the official wiki for my display.

The rough steps of the process I followed are as follows:

- Install BCM2835 Libraries

- Enable the SPI interface on the Raspberry Pi

- Download some random software; don’t follow the wget route, just clone the repo from GitHub

- Compile the software which turns out to be code and demonstration of the display

I hate that drivers for hardware still come from some random domain somewhere in a mysterious tarball. Thanks mikem for your bcm2835 drivers - whatever they do! Still, I wish vendors would do better. The good news was that executing the epd program compiled by the sample project made the display come alive and cycle through a demonstration. WOOHOO!

The next problem was the fact that all the sample code was in C, and I wanted to remain in ez mode in Python. I had seen other Waveshare repos contain Python code, but for this controller only C was available. Crap.

Google to the rescue

So, once again I descended on Google. There’s a brilliant project called omni-epd that turned out to be exactly what I needed.

Installing it on the RPi was relatively straightforward:

pip3 install git+https://github.com/robweber/omni-epd.git#egg=omni-epd

What’s neat is that the build here installs everything you need, including the drivers. So, I tried running some code and it failed. Nothing happened. The prompt was right, but simply nothing worked. I rebooted the Pi; still nothing worked. I was about to lose hope when a magical GitHub issue, which I have since lost the link to, simply told me to run a command to restart the SPI controller on the RPi.

So I did. And IT WORKED!

Omni-epd has sample code to draw an image in this file.

1 | import sys |

And that is literally the only feature I was looking for. Even better, the thing just worked! I had found a way to load up my unhinged AI Picture.

Putting the device together

Since it was working, it was time to move the display to the frame. I had hastily guesstimated an A4 frame would be good enough to fit the display, so I deployed the sophisticated method of unrolling the painter’s tape I had lying around to firmly attach the display to the frame.

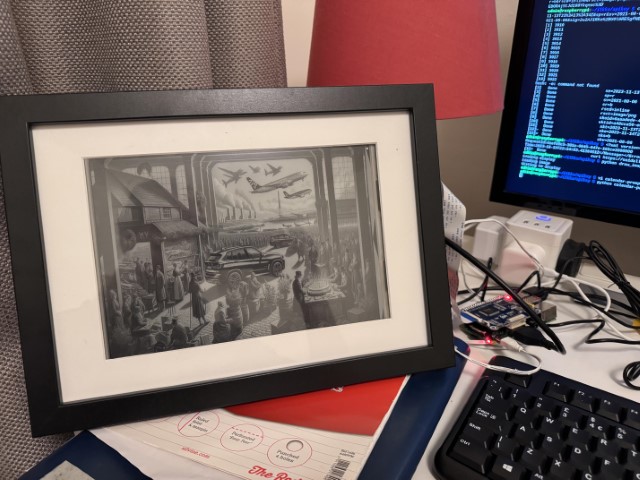

Just ignore the guts spilling out, they got taped to the back later

It seriously looks way better than I hoped. The image style, combined with the E Ink, really makes it look like a real print ripped from the pages of a book. The frame masks the display electronics well, and even though it isn’t all hidden, it kind of looks like it belongs. I totally did not expect it to come together so well so quickly. From concept to this stage took about 12 hours.

Refining the picture

The image was nice, but I did feel it missed some minimal information. I caved and figured it would be cool to add the title the AI came up with and the date on top of it. Since this isn’t a responsive website I can just use static values for the position. Also I’m lazy.

1 | image = Image.open(BytesIO(b64decode(data))) |

I also modified the OpenAI prompt to generate a title for the image based on the prompt. This is a neat way to add some detail and mystery to the day.

The MOT happened and I had some upcoming travel. I love the allegory here, as well as the classic kidney grille

It’s hard to do the images justice without seeing them on the device itself. The E Ink display looks so natural, and with the improved prompt the textures really come alive. Adding the date helps give this a calendar feel without it being a calendar.

Making it a service

I initially thought that I would just leave the Pi running 24/7 and run a cron job once a day. I even tried it, but forgot some relative paths in the code, so that failed. Instead, it dawned on me at some point that E Ink displays only need power when refreshing. This meant the Pi just needs to be on for the briefest of times to refresh the image, once per day.

So I just bought a power socket timer switch. It’s analogue but hey, I just want something that works. Think of it as a sort of analogue cron job in meatspace.

I created a new system service following the instructions in this gloriously informative Stack Overflow post.

1 | [Unit] |

The key here is that the script needs to run after network online is true, so services help with that. The actual shell script to run the service itself is super simple. I separated the scripts out because I was too lazy to call up functions.

1 |

|

So, it all came together in about 30 hours!

Now the timer turns the Pi on at 5am every day and keeps the power on for 15 minutes (the minimum increment on the timer switch). This is more than enough time to refresh the screen, in most cases completing in 2 minutes or less.

This approach also has the added benefit that if I don’t like the image I can simply reroll it by restarting the Pi, flipping the switch manually. Once the image refreshes, wait a minute and turn the power off again. Simple.
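A footnote on the relative paths that sank the cron attempt: neither cron nor a system service starts a script in its own folder, so bare filenames resolve somewhere unexpected. One common workaround is to build paths from the script’s own location. A minimal sketch, where the `beside_script` helper, the `/home/pi/kuvastin/main.py` path and the `token.json` filename are hypothetical examples rather than the project’s real layout:

```python
from pathlib import Path


def beside_script(script_file, name):
    """Return an absolute path to `name` in the same folder as the script."""
    return Path(script_file).resolve().parent / name


# In a real script the first argument would be __file__.
# The path and filename here are made up for illustration.
token_path = beside_script("/home/pi/kuvastin/main.py", "token.json")
print(token_path)
```

With this in place the script behaves the same whether it is launched by hand, by cron or by a service.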

A word on costs

Now, you might think all this AI work costs money and you would be right about that. To try and curtail it, I used the OpenAI Token estimator to guesstimate that my prompts and returns were about 1k tokens each. I’m using the standard image generation at 1024x1024 resolution with DALL-E, so at the going rate the pricing works out to about 8 cents per pop.

On an annualised basis this works out to $29.20 in API charges to get my daily art. The major contributor to this is my usage of GPT-4 for everything except the caption. I could further optimise the cost here by consolidating the prompt calls into one and using cheaper models, but I don’t trust the consistency of the GPT models to always give me exactly what I want. I could also probably host a better and cheaper image model somewhere, but to be honest I can’t be bothered. Also, given the recent OpenAI drama, who knows if I’ll have to port it to Azure soon or something.
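The annual figure is just the per-image estimate multiplied out over a year. A quick sketch of that arithmetic, using only the 8 cents per generation from above and one generation per day (any manual rerolls would add to it):

```python
COST_PER_IMAGE_CENTS = 8  # rough per-generation estimate from this post
DAYS_PER_YEAR = 365


def annual_cost_dollars(cents_per_day, days=DAYS_PER_YEAR):
    """One generation per day, converted from cents to dollars."""
    return cents_per_day * days / 100


print(f"${annual_cost_dollars(COST_PER_IMAGE_CENTS):.2f}")
```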

Future improvements

For every project 80% of the value is in 20% of the work, and here it feels like enough for a nice piece of personal art. If I wanted to refine this I would probably:

- Make the code better and more modular

- Log the output of the service

- Save the daily generation results to a db so I can do cool timelapses later

- Reduce the operational cost

- Come up with some remote configurator

- Negotiate with a manufacturer of the displays to drive the unit cost down

- Use a cheaper RPi like the Zero 2 W.

Doctors’ appointments, MOTs, writing this blog all come out really neat. It’s like a daily horoscope without any of the predictions.

For now though, I’m going to enjoy my pretty pictures and chuckle at the weird and wonderful generations I get. Hope you learnt something.

If not, just poke around in the GitHub repo for this and try it for yourself.

About this Post

This post is written by Ilkka Turunen, licensed under All Rights Reserved.